Neural Style Transfer

Introduction

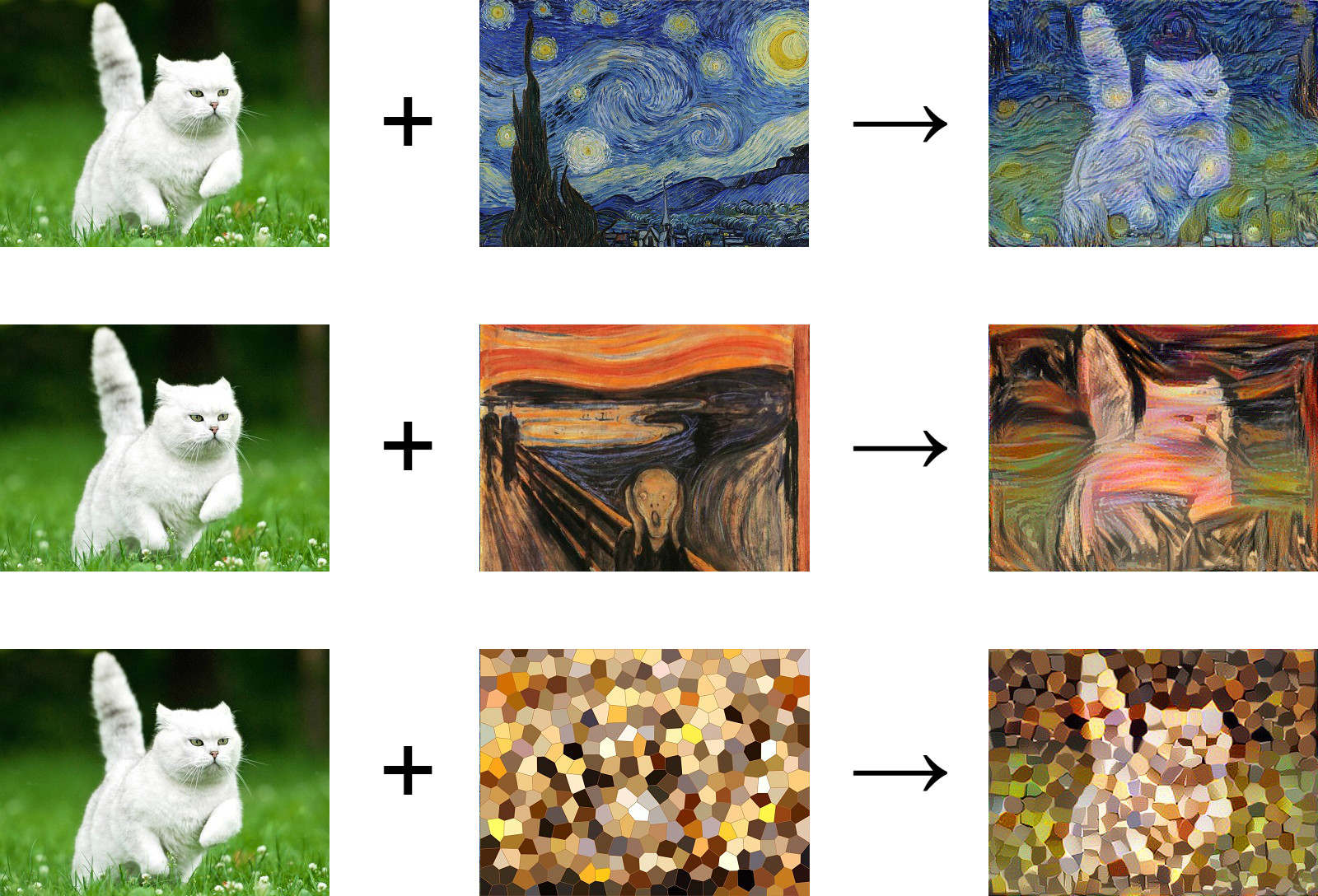

Neural Style Transfer (NST) is one of the coolest techniques in deep learning. It is an algorithm that generates a new image starting from a content image (the cat in the image above) and a style image. The objective is for the generated image to contain the “content” of the content image, but have the same “style” as the style image. NST is a form of transfer learning which uses a pretrained convolutional neural network (CNN). Instead of training the weights of the network, we train the generated image, which is used as an input to network, until the objective is achieved sufficiently. The algorithm was created by Gatys et al. (2015).

I have implemented NST in TensorFlow using a pretrained VGG-19 network trained on the ImageNet dataset. To avoid having to search the Internet for pretrained weights and having to create the layers myself, I used a pretrained Keras model from keras.applications. I extracted the layers of the Keras model and used them to create a TensorFlow graph.

The source code can be found at https://github.com/CarlFredriksson/neural_style_transfer.

Implementation

Import modules

To get started we need to import some modules.

import os

import cv2

import numpy as np

import tensorflow as tf

from tensorflow.keras import backend as K

from tensorflow.keras.applications import VGG19

from tensorflow.keras.layers import MaxPooling2DDefine constants

Let us define some constants we will need.

CONTENT_IMG_PATH = "./input/cat.jpg"

STYLE_IMG_PATH = "./input/starry_night.jpg"

GENERATED_IMG_PATH = "./output/generated_img.jpg"

IMG_SIZE = (400, 300)

NUM_COLOR_CHANNELS = 3

ALPHA = 10

BETA = 40

NOISE_RATIO = 0.6

CONTENT_LAYER_INDEX = 13

STYLE_LAYER_INDICES = [1, 4, 7, 12, 17]

STYLE_LAYER_COEFFICIENTS = [0.2, 0.2, 0.2, 0.2, 0.2]

NUM_ITERATIONS = 500

LEARNING_RATE = 2

VGG_IMAGENET_MEANS = np.array([103.939, 116.779, 123.68]).reshape((1, 1, 3)) # In blue-green-red order

LOG_GRAPH = FalseThe purpose of some of these are self-apparent and the others will explained later on. Feel free to play around with different constant values and see how the results are affected.

Load and preprocess images

We need to load the content and style images, and do some simple preprocessing before they can be used as input to the network. I used the library OpenCV (cv2) for image processing.

def load_img(path, size, color_means):

"""Load image from path, preprocess it, and return the image."""

img = cv2.imread(path)

img = cv2.resize(img, dsize=size, interpolation=cv2.INTER_CUBIC)

img = img.astype("float32")

img -= color_means

img = np.expand_dims(img, axis=0)

return imgcontent_img = load_img(CONTENT_IMG_PATH, IMG_SIZE, VGG_IMAGENET_MEANS)

style_img = load_img(STYLE_IMG_PATH, IMG_SIZE, VGG_IMAGENET_MEANS)The images are normalized using the means of each color channel for ImageNet, contained in the constant VGG_IMAGENET_MEANS. Note that the order of the colors are BGR (Blue Green Red) and not RGB, because the network we will use is trained on BGR images and OpenCV uses BGR by default.

We will also need a function for saving images. Remember to add the color means that were subtracted in preprocessing.

def save_img(img, path, color_means):

"""Save image to path after postprocessing."""

img += color_means

img = np.clip(img, 0, 255)

img = img.astype("uint8")

cv2.imwrite(path, img)Initialize the generated image

The generated image is initialized as a combination of the content image and random noise. We could initialize to pure noise, but this would result in longer training time before the content of the generated image resembles the content of the content image.

def create_noisy_img(img, noise_ratio):

"""Add noise to img and return it."""

noise = np.random.uniform(-20, 20, (img.shape[0], img.shape[1], img.shape[2], img.shape[3])).astype("float32")

noisy_img = noise_ratio * noise + (1 - noise_ratio) * img

return noisy_imggenerated_img_init = create_noisy_img(content_img, NOISE_RATIO)Cost function

In order to satisfy the objective of training a generated image to have the content of the content image and the style of the style image, we need to define a cost function that can be optimized. We will define two separate parts of this function, the content cost and the style cost, which we will combine to a total cost.

Content cost

How can we make sure that the content in the generated image matches the content of the content image? In a CNN, the activations of shallow layers detect low level features such as edges. The activations of deep layers detect higher level features, such as objects like cats or cars. We will use deep layer activations to ensure that the high level features of the generated image are similar to the high level features of the content image.

Let $a^{(c)}$ be the activations of some deep layer when the content image is used as input to the network, and $a^{(g)}$ be the activations when the generated image is used. Let $n_h, n_w, n_c$ be the height, width, and number of channels of the chosen layer. The content cost is defined as:

$$ J_{content}(a^{(c)},a^{(g)}) = \frac{1}{4 \times n_h \times n_w \times n_c} \sum_{\text{all entries}}(a^{(c)} - a^{(g)})^2 $$

def content_cost(a_c, a_g):

"""Return a tensor representing the content cost."""

_, n_h, n_w, n_c = a_c.shape

return (1/(4 * n_h * n_w * n_c)) * tf.reduce_sum(tf.square(tf.subtract(a_c, a_g)))Style cost

The next question is how can we make sure that the style of the generated image matches the style of the style image? The style cost function is a bit more complicated than the content cost function. To start of we need to know how to compute the gram matrix for a set of vectors $(v_1,\dots,v_n)$. The entries $G_{ij}$ of a gram matrix are computed by:

$$ G_{ij} = v_i \cdot v_j = v_i^T v_j $$

Intuitively $G_{ij}$ is one way to measure how similar $v_i$ is to $v_j$, since if they are similar their dot product should be large. We will compute gram matrices by unrolling activations for some layer into a matrix with one row for each channel of that layer, and then taking the matrix product between the matrix and its transpose. The resulting gram matrix $G$ is also called a style matrix and has dimensions $(n_c,n_c)$. As an example of what the style matrix captures: if the channel (also called filter) $i$ is detecting vertical textures, and $j$ is detecting horizontal textures, then $G_{ij}$ measure how much vertical and horizontal textures occur together in the image.

Using the gram matrices $G^{(s)}$ and $G^{(g)}$ for the style and generated images respectively, the style cost for a given layer $l$ is defined as:

$$ J_{style}^{[l]}(G^{(s)},G^{(g)}) = \frac{1}{4 \times n_c^2 \times (n_h \times n_w)^2} \sum_{i=1}^{n_c} \sum_{j=1}^{n_c} {(G_{ij}^{(s)} - G_{ij}^{(g)})^2} $$

Unlike the content cost, the style cost normally uses several layers with corresponding coefficients $\lambda^{[l]}$:

$$ J_{style} = \sum_l \lambda^{[l]} J_{style}^{[l]} $$

def style_cost(a_s_layers, a_g_layers, style_layer_coefficients):

"""Return a tensor representing the style cost."""

style_cost = 0

for i in range(len(a_s_layers)):

# Compute gram matrix for the activations of the style image

a_s = a_s_layers[i]

_, n_h, n_w, n_c = a_s.shape

a_s_unrolled = tf.reshape(tf.transpose(a_s), [n_c, n_h*n_w])

a_s_gram = tf.matmul(a_s_unrolled, tf.transpose(a_s_unrolled))

# Compute gram matrix for the activations of the generated image

a_g = a_g_layers[i]

a_g_unrolled = tf.reshape(tf.transpose(a_g), [n_c, n_h*n_w])

a_g_gram = tf.matmul(a_g_unrolled, tf.transpose(a_g_unrolled))

# Compute style cost for the current layer

style_cost_layer = (1/(4 * n_c**2 * (n_w* n_h)**2)) * tf.reduce_sum(tf.square(tf.subtract(a_s_gram, a_g_gram)))

style_cost += style_cost_layer * style_layer_coefficients[i]

return style_costTotal cost

Let $\alpha$ and $\beta$ be constants. The total cost is defined as a linear combination of the content cost and style cost:

$$ J = \alpha J_{content} + \beta J_{style} $$

def total_cost(content_cost, style_cost, alpha, beta):

"""Return a tensor representing the total cost."""

return alpha * content_cost + beta * style_costCreate graph

Now it is time to create the computation graph and store the tensors that corresponds to the layer activations we are interested in. Let us start by creating a TensorFlow variable which we will use as an input to the network.

input_var = tf.Variable(content_img, dtype=tf.float32, expected_shape=(None, None, None, NUM_COLOR_CHANNELS), name="input_var")The variable is initialized to the content image, and will later be assigned the style and generated images. Now let us get the pretrained VGG-19 Keras model, extract the layers, and store the output tensors we will need. Note that the MaxPooling layers are swapped for AvgPooling layers. This gives NST better performance.

def create_output_tensors(input_variable, content_layer_index, style_layer_indices):

"""

Create output tensors, using a pretrained Keras VGG19-model.

Return tensors for content and style layers.

"""

vgg_model = VGG19(weights="imagenet", include_top=False)

layers = [l for l in vgg_model.layers]

x = layers[1](input_variable)

x_content_tensor = x

x_style_tensors = []

if 1 in style_layer_indices:

x_style_tensors.append(x)

for i in range(2, len(layers)):

# Use layers from vgg model, but swap max pooling layers for average pooling

if type(layers[i]) == MaxPooling2D:

x = tf.nn.avg_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

else:

x = layers[i](x)

# Store appropriate layer outputs

if i == content_layer_index:

x_content_tensor = x

if i in style_layer_indices:

x_style_tensors.append(x)

return x_content_tensor, x_style_tensorsx_content, x_styles = create_output_tensors(input_var, CONTENT_LAYER_INDEX, STYLE_LAYER_INDICES)The constant CONTENT_LAYER_INDEX=13 makes us use the activations of layer block4_conv2 for computing content cost. The constant STYLE_LAYER_INDICES = [1, 4, 7, 12, 17] makes us use the activations of the layers block1_conv1, block2_conv1, block3_conv1, block4_conv1, and block5_conv1 for computing style cost. Feel free to use other layers, or change the STYLE_LAYER_COEFFICIENTS. You can use vgg_model.summary() to see the available layers.

Create training operation

Now it is time to create the training operation. Note that the Keras session is used instead of tf.Session(), this is because we have used a Keras model and the weights for that model is contained in the Keras session. It is important to not use tf.global_variables_initializer() in this case, since the pretrained weights would be randomized. Instead we use tf.variables_initializer([input_var]). Also note that the AdamOptimizer has variables that we initialize using tf.variables_initializer(optimizer.variables()).

optimizer = tf.train.AdamOptimizer(LEARNING_RATE)

# Use the Keras session instead of creating a new one

with K.get_session() as sess:

sess.run(tf.variables_initializer([input_var]))

# Extract the layer activations for content and style images

a_content = sess.run(x_content, feed_dict={K.learning_phase(): 0})

sess.run(input_var.assign(style_img))

a_styles = sess.run(x_styles, feed_dict={K.learning_phase(): 0})

# Define the cost function

J_content = content_cost(a_content, x_content)

J_style = style_cost(a_styles, x_styles, STYLE_LAYER_COEFFICIENTS)

J_total = total_cost(J_content, J_style, ALPHA, BETA)

# Log the graph. To display use "tensorboard --logdir=log".

if LOG_GRAPH:

writer = tf.summary.FileWriter("log", sess.graph)

writer.close()

# Assign the generated random initial image as input

sess.run(input_var.assign(generated_img_init))

# Create the training operation

train_op = optimizer.minimize(J_total, var_list=[input_var])

sess.run(tf.variables_initializer(optimizer.variables()))Train the generated image

It is finally time to train the generated image! The generated image is saved every 20th iteration so you can see the progress. The following code should be inside the with K.get_session() as sess: block created above.

for i in range(NUM_ITERATIONS):

sess.run(train_op)

if (i%20) == 0:

print(

"Iteration: " + str(i) +

", Content cost: " + "{:.2e}".format(sess.run(J_content)) +

", Style cost: " + "{:.2e}".format(sess.run(J_style)) +

", Total cost: " + "{:.2e}".format(sess.run(J_total))

)

# Save the generated image

generated_img = sess.run(input_var)[0]

save_img(generated_img, GENERATED_IMG_PATH, VGG_IMAGENET_MEANS)

# Save the generated image

generated_img = sess.run(input_var)[0]

save_img(generated_img, GENERATED_IMG_PATH, VGG_IMAGENET_MEANS)Conclusion

I am happy about how the project turned out. Neural Style Transfer is a really cool technique, and it is always nice with visual results that can be appreciated by non-ML people!

The way I extracted the layers from the pretrained Keras model does seem slightly “hacky”, but overall I feel like it is a decent alternative that I probably will use again.

The source code can be found at https://github.com/CarlFredriksson/neural_style_transfer.

Thank you for reading, and feel free to send me any questions.